As technology becomes more integrated with human biology, Elon Musk’s Neuralink is working at the edge of a revolutionary and controversial field. With the development of brain-computer interfaces (BCIs), science fiction is becoming reality. This leap in neuroscience has triggered debates about data ownership, privacy, and individuals’ rights over their neural information.

In this blog, we delve into the intricacies of brain data privacy and try to answer one question: who owns the data when a device can read your thoughts?

The Blurred Lines of Neural Data Ownership

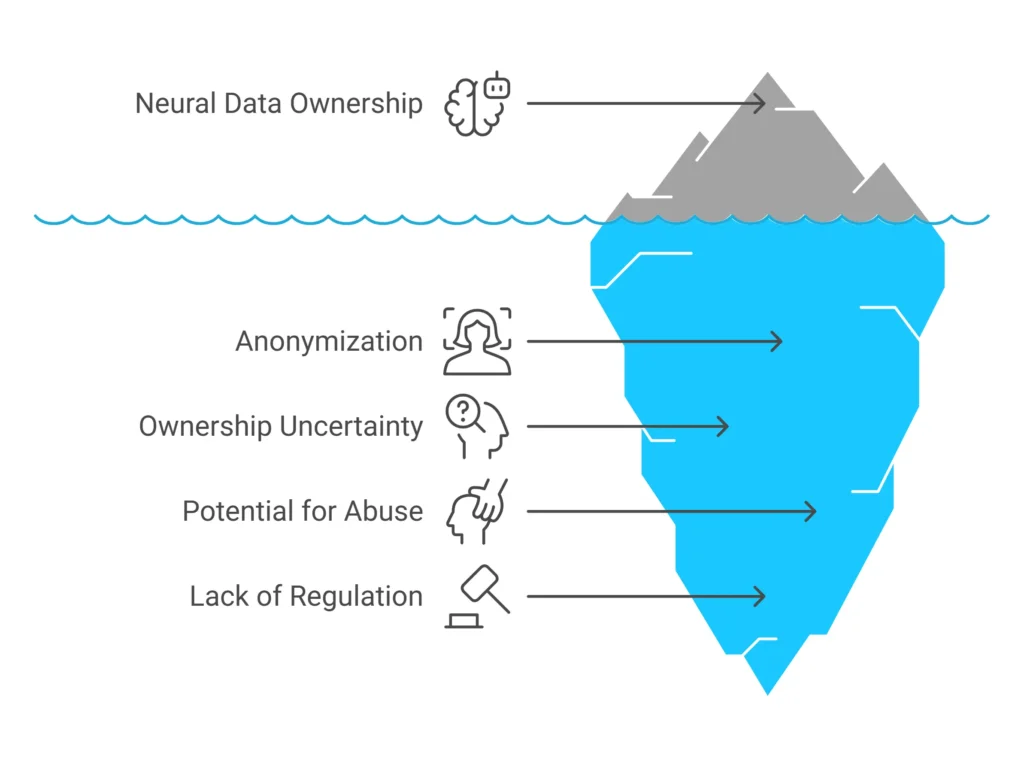

Neuralink’s terms of service allow user data to be anonymized, but they do not make clear who ultimately owns the raw neural signals recorded by the device. This ambiguity is troubling: it raises the very real possibility that someone other than you could own your decoded “thoughts”, paving the way for abuse on an unprecedented scale.

Neuralink sits on the frontier between medical and consumer devices. In the US, brain signals collected in a medical context are governed by HIPAA, whereas consumer neurotech devices generally operate in a regulatory gray area, which may allow companies to collect, analyze, and even sell neural signals without explicit prior consent.

Dr. Rafael Yuste of Columbia University and the Neurorights Foundation cautions: “Your brain activity contains your thoughts, memories, and identity—information more personal than your DNA. Without proper regulations, companies could extract and monetize this data without users fully understanding the implications.”

Emerging Legal Frameworks

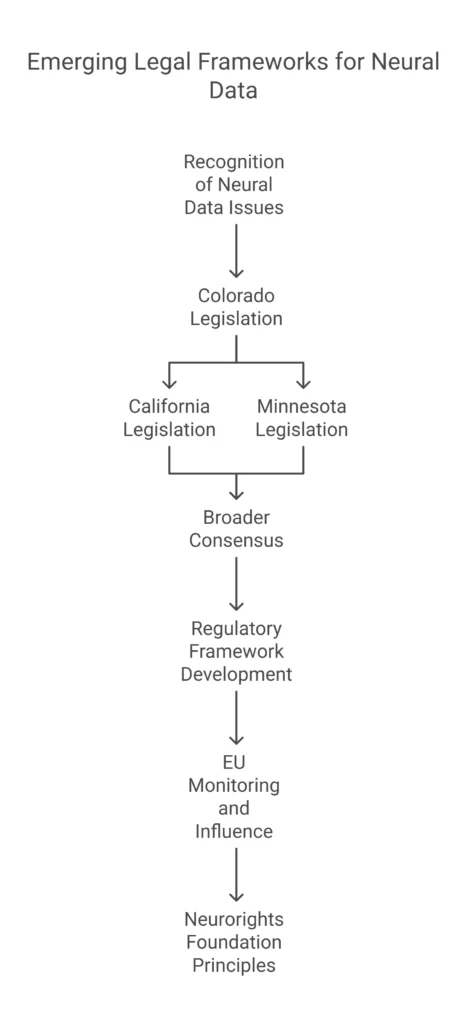

Recognizing what is at stake for the public, lawmakers have begun to move neural privacy out of the back alleys and into the mainstream of legislation. In April 2024, Colorado became the first US state to legally classify neural data as “sensitive” and to require explicit consent for its collection and use.

This landmark legislation establishes that brain data deserves a higher level of protection than everyday personal information.

Following the Colorado model, comparable legislation has been introduced in California and Minnesota, reflecting a broadening consensus that neural data needs its own legal protection. These provisions are the first steps toward a regulatory framework that some policymakers envision extending to the use of neurotechnology as a whole.

The European Union has also been closely monitoring these developments, with attention to frameworks such as the Neurorights Foundation’s five neurorights (for example, the rights to mental privacy and to protection against algorithmic bias). These principles are now actively influencing policy debates throughout the EU.

The Corporate Data Exploitation Risk

While Neuralink’s stated objective of “unlocking human potential” sounds admirable, one could question the motive behind it, especially in light of past data revolutions that began with utopian promises but ended up as little more than data harvesting for profit.

Many social media platforms set out initially with the intention to connect people but evolved into highly advanced predictive and manipulative surveillance systems.

The potential for misuse only grows as the technology advances, and neural interfaces go further than any platform before them: they grant direct access to thought processes.

The danger concerns not only privacy but also freedom of thought. Dr. Emily Martinez, a researcher in digital ethics, states: “The conflict surfaces when these companies invoke the potential to interfere with activity in the neural space. Then the line that separates your thoughts from external interference becomes precariously faint.”

Security Vulnerabilities and Threat Landscape

Beyond privacy, neural interfaces also raise security challenges. Brain data is among the most personal data that cybercriminals could seize.

Security researchers have warned that a compromised neural interface could open the door to new and previously unimaginable scenarios of identity theft, behavioral manipulation, and even interference with the subject’s own mental processes. Unlike a typical data breach, which can often be remedied with a password reset, an attack on neural data could cause deep and irreversible harm.

Recent work has shown that supposedly anonymized brain data can be re-identified through pattern analysis, which naturally raises the question of whether neural information can ever be truly anonymized.

Neuralink’s Trust Deficit

Elon Musk’s track record with user data has called into question whether Neuralink genuinely cares about privacy. After the controversy over how Twitter (now X) handled data under his leadership, there is widespread anxiety that it would be a mistake to entrust brain data to companies run by Musk.

These concerns are magnified by allegations of animal abuse during Neuralink’s experimental research, in which approximately 1,500 animals are reported to have died. If true, these allegations place considerable pressure on the company and its stakeholders to reexamine its ethical standards.

Meanwhile, an FDA inquiry into problems with the implant’s wires further undermines confidence in the company’s ability to deliver a technology as consequential as a direct brain interface.

Comparing Neural Privacy Approaches

Different stakeholders in the neurotech space have adopted varying approaches to data privacy and ownership. The following table illustrates how major entities position themselves on crucial neural privacy issues:

| Entity | Data Ownership Model | User Control | Regulatory Compliance | Transparency Level | Commercial Use of Data |
| --- | --- | --- | --- | --- | --- |
| Neuralink | Ambiguous; company may retain rights | Limited opt-out options | Minimal beyond FDA device approval | Low; limited disclosures | Potential for extensive commercialization |
| Academic BCI Research | Typically user-owned with research license | Comprehensive consent required | IRB oversight and ethical guidelines | High; publishing requirements | Limited to research purposes |
| Colorado Law (2024) | User explicitly owns neural data | Opt-in required for collection | State-level enforcement | Mandated disclosures | Restricted without explicit consent |
| EU Proposed Framework | User maintains fundamental rights | Strong consent requirements | Comprehensive regulatory structure | High transparency mandated | Heavily restricted |
| Meta’s Brain Interface | Company claims limited processing rights | Opt-in with partial controls | Follows general data protection rules | Medium; selective disclosures | Acknowledged commercial applications |

This comparison reveals significant disparities in how different entities approach neural data protection, highlighting the urgent need for standardized frameworks that prioritize user rights.

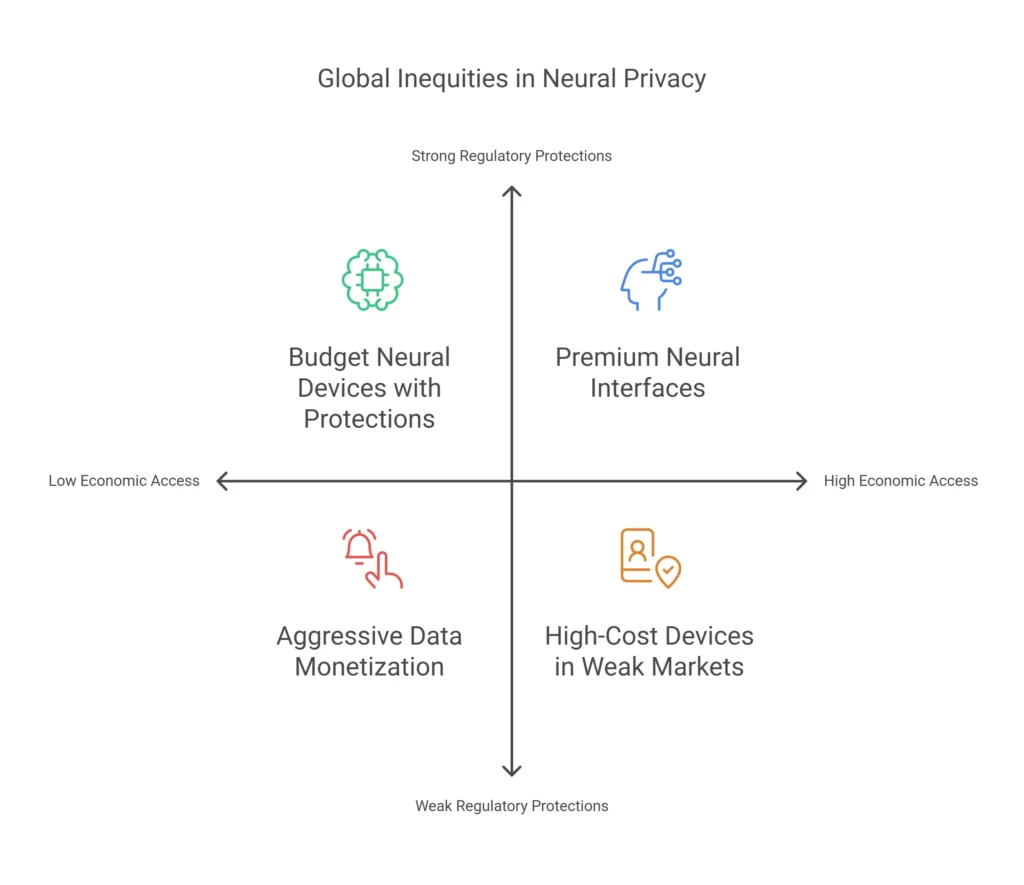

Global Inequities in Neural Privacy

The emergence of commercial neurotech raises profound questions about global access and equity. Experts warn that without appropriate regulations, neural interfaces could exacerbate existing social divides, with premium privacy protections available only to wealthy individuals while vulnerable populations face greater risks of data exploitation.

“We’re seeing the potential for a two-tier system of neural privacy,” notes Dr. James Wong, international technology policy expert. “High-income individuals may access premium interfaces with robust privacy guarantees, while budget devices targeted at broader markets could subsidize their lower cost through aggressive data monetization.”

This inequality extends beyond economic dimensions to encompass geographical and regulatory disparities. Regions with strong data protection frameworks may secure certain safeguards for citizens, while those in areas with limited regulations could become testing grounds for more invasive neural data practices.

The Shifting Public Perception

As new technologies emerge, public concern about neural privacy is growing and shifting rapidly. Recent studies show a striking number of people likening neural data to DNA, with more and more of them treating brain information as especially sensitive data rather than just another personal file.

This shift accelerated in 2024-2025 as consumer neurotech applications, such as emotion-tracking dating apps and focus-enhancing wearables, became available to the public. Because these technologies make it possible to track day-to-day mental activity, people are debating what limits should be placed on data collection and monitoring.

Tech ethicist Dr. Sophia Chen observes: “I believe we are seeing a huge shift in how people perceive the privacy of their information. People who are comfortable sharing on social media are actively questioning the sharing of their neural patterns. This suggests an intuitive understanding that data about one’s brain belongs in a different category altogether.”

The Road Ahead: Building Ethical Frameworks

The urgent need for regulation makes neurotechnology a challenging, yet promising, industry. Without sufficient governance frameworks, there is a real risk that bad actors will exploit neural interfaces.

The following principles can serve as basic rules for the ethical development of neurotechnology:

- Explicit Consent: Users’ consent must be informed, specific, and obtained separately for each use of their neural data.

- Data Minimization: Companies should collect only the neural data needed to deliver the functionality they claim.

- Purpose Limitation: New consent must be obtained for any purpose that differs from the one originally agreed to.

- Algorithmic Transparency: Users must be able to understand how their neural data informs the decisions made by algorithms.

- Right to Cognitive Liberty: Users must not be subjected to processes that influence their thinking against their will.
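To make the consent, data-minimization, and purpose-limitation principles above more concrete, here is a minimal Python sketch of how a neurotech service might gate access to recorded neural data. It is an illustration under stated assumptions, not how Neuralink or any real product works: `ConsentRegistry`, `REQUIRED_FIELDS`, `fetch_neural_data`, and the purpose and field names (`cursor_control`, `motor_cortex_spikes`, and so on) are all hypothetical.

```python
from dataclasses import dataclass, field


class ConsentError(PermissionError):
    """Raised when neural data is requested for a purpose the user has not approved."""


@dataclass
class ConsentRegistry:
    # Hypothetical store: user id -> purposes that user has explicitly opted into.
    grants: dict[str, set[str]] = field(default_factory=dict)

    def grant(self, user_id: str, purpose: str) -> None:
        # Explicit consent: every purpose is recorded separately, never implied.
        self.grants.setdefault(user_id, set()).add(purpose)

    def revoke(self, user_id: str, purpose: str) -> None:
        self.grants.get(user_id, set()).discard(purpose)

    def allows(self, user_id: str, purpose: str) -> bool:
        return purpose in self.grants.get(user_id, set())


# Data minimization: each (hypothetical) purpose lists the only fields it may read.
REQUIRED_FIELDS: dict[str, set[str]] = {
    "cursor_control": {"motor_cortex_spikes"},
    "research_study": {"motor_cortex_spikes", "session_timestamps"},
}


def fetch_neural_data(registry: ConsentRegistry, user_id: str,
                      purpose: str, record: dict) -> dict:
    """Return only the fields `purpose` needs, or refuse if consent is missing."""
    if purpose not in REQUIRED_FIELDS:
        raise ValueError(f"undeclared purpose: {purpose!r}")
    # Purpose limitation: a purpose the user never opted into is rejected outright.
    if not registry.allows(user_id, purpose):
        raise ConsentError(f"{user_id!r} has not consented to {purpose!r}")
    needed = REQUIRED_FIELDS[purpose]
    # Data minimization: drop every field the stated purpose does not require.
    return {key: value for key, value in record.items() if key in needed}


if __name__ == "__main__":
    registry = ConsentRegistry()
    registry.grant("user-1", "cursor_control")
    record = {"motor_cortex_spikes": [3, 7, 2],
              "session_timestamps": [0.0, 0.1, 0.2],
              "emotion_score": 0.8}
    print(fetch_neural_data(registry, "user-1", "cursor_control", record))
    # prints {'motor_cortex_spikes': [3, 7, 2]}
    try:
        fetch_neural_data(registry, "user-1", "research_study", record)
    except ConsentError as err:
        print("refused:", err)
```

The design is default-deny: access fails unless the user has opted into that exact purpose, and revoking consent takes effect on the next request. Real systems would also need audit logs, secure storage, and legal review, which this sketch deliberately leaves out.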

The Neurorights Foundation has been instrumental in articulating these principles, advocating for a human-centered approach to neural technology that prioritizes individual autonomy and wellbeing over commercial interests.

Conclusion: Claiming Ownership of Our Neural Future

At the end of the day, the question “Who owns your brain data?” must be answered without ambiguity: you do. This principle is fundamental to the development of neurotechnology, because it ensures that these powerful technologies support rather than undermine human agency and wellbeing. The decisions we make about neural privacy now will say a great deal about our technological aspirations and our values as a society.

The potential of Neuralink and similar technologies is staggering, but so are the risks of leaving them unregulated. It is therefore equally imperative that we exercise great caution in governing how these technologies evolve and are used.

We are at a pivotal moment, and the choices we make now will be enormously consequential. The potential of technologies like Neuralink is remarkable, but the responsibility that accompanies their development is just as great. How much of that potential is realized may depend on the insight we bring to regulating their advancement and use.
